You have to be obsessive-compulsive to avoid the privacy landmines that companies like OpenAI and Anthropic scatter throughout their products.

Yesterday I wrote a blog post about how the “How is Claude doing this session?” prompt seemed like a feature designed to sneak more data from paying Claude users who had opted out of sharing data to improve and train Anthropic’s models. But in that post, I could only theorize that even tapping “0” to “Dismiss” the prompt might be considered “feedback” and therefore hand over the session chat to the company for model training.

I can confirm that tapping “0” to “Dismiss” is considered “feedback” (a very important word when it comes to privacy policies). When you do so, Claude says “thanks for the feedback … and thanks for helping to improve Anthropic’s models”. (This is a paraphrase, because the message lasted about 2 seconds before vanishing.) Obviously this is NOT what I or others are trying to accomplish by tapping “Dismiss”. I assume this is NOT a mistake on the company’s part, but I’d be interested in a clarification from the company either way. I would wager that a fair case could be made that classifying this response as (privacy-defeating) “feedback” runs afoul of contract law (but I am not a lawyer).

Anyway, I clicked it so you don’t have to. My advice: don’t interact with that prompt at all; just ignore it.

Original post:

Assume that “How is Claude doing this session?” is a privacy loophole

I am a power user of AI models who pays a premium for plans that claim to better respect user privacy. (By the way, I am not a lawyer.)

With OpenAI, I pay $50/month for a business account (2 seats) instead of $20/month for an individual plan because of its stronger privacy promises. I don’t even need the extra seat, so I’m paying $30 more every month!

Yet with OpenAI, there is this caveat: “If you choose to provide feedback, the entire conversation associated with that feedback may be used to train our models (for instance, by selecting thumbs up or thumbs down on a model response).”

So I never click the thumbs up/down.

But I’m nervous… Notice how that language is kept open-ended? What else constitutes “feedback”?

Let’s say I’m happy with a prompt response, and my next prompt starts with “Good job. Now…” Is that feedback? YES! Does OpenAI consider it an excuse to train on that conversation? 🤷 Can I get something in writing or should I assume zero privacy and just save my $30/month?

I was initially drawn to Anthropic’s product because it had much stronger privacy guarantees out of the gate. Recent changes to that privacy policy made me suspicious, as did some of the ways they’ve handled the change.

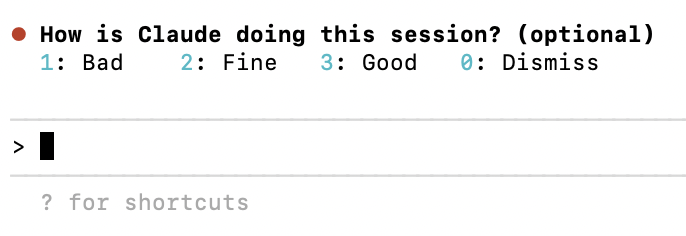

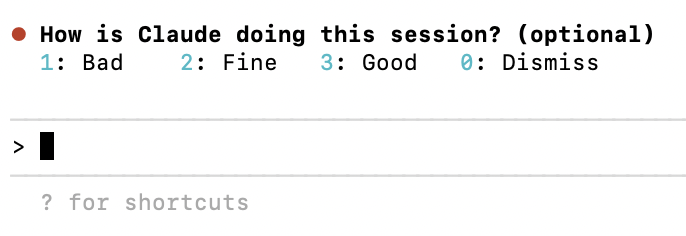

But recently I’ve seen this very annoying prompt in Claude Code, which I shouldn’t even see because I’ve opted OUT of helping “improve Anthropic AI models”:

What are its privacy implications? Here’s what the privacy policy says:

“When you provide us feedback via our thumbs up/down button, we will store the entire related conversation, including any content, custom styles or conversation preferences, in our secured back-end for up to 5 years. Feedback data does not include raw content from connectors (e.g. Google Drive), including remote and local MCP servers, though data may be included if it’s directly copied into your conversation with Claude…. We may use your feedback to analyze the effectiveness of our Services, conduct research, study user behavior, and train our AI models as permitted under applicable laws. We do not combine your feedback with your other conversations with Claude.”

This new prompt seems like “feedback” to me, which would mean that typing 1, 2, or 3 (or maybe even 0) could compromise the privacy of the entire session. All we can do is speculate, and I’ll say it: shame on the product people for not helping users make a more informed choice about what they are sacrificing, especially those who opted out of helping to “improve Anthropic AI models”.

It’s a slap in the face for users paying hundreds of dollars a month to use your service.

As AI startups keep burning through unprecedented amounts of cash, I expect whatever “principles” their founders may have had, including about privacy, to continue to erode.

Be careful out there, folks.